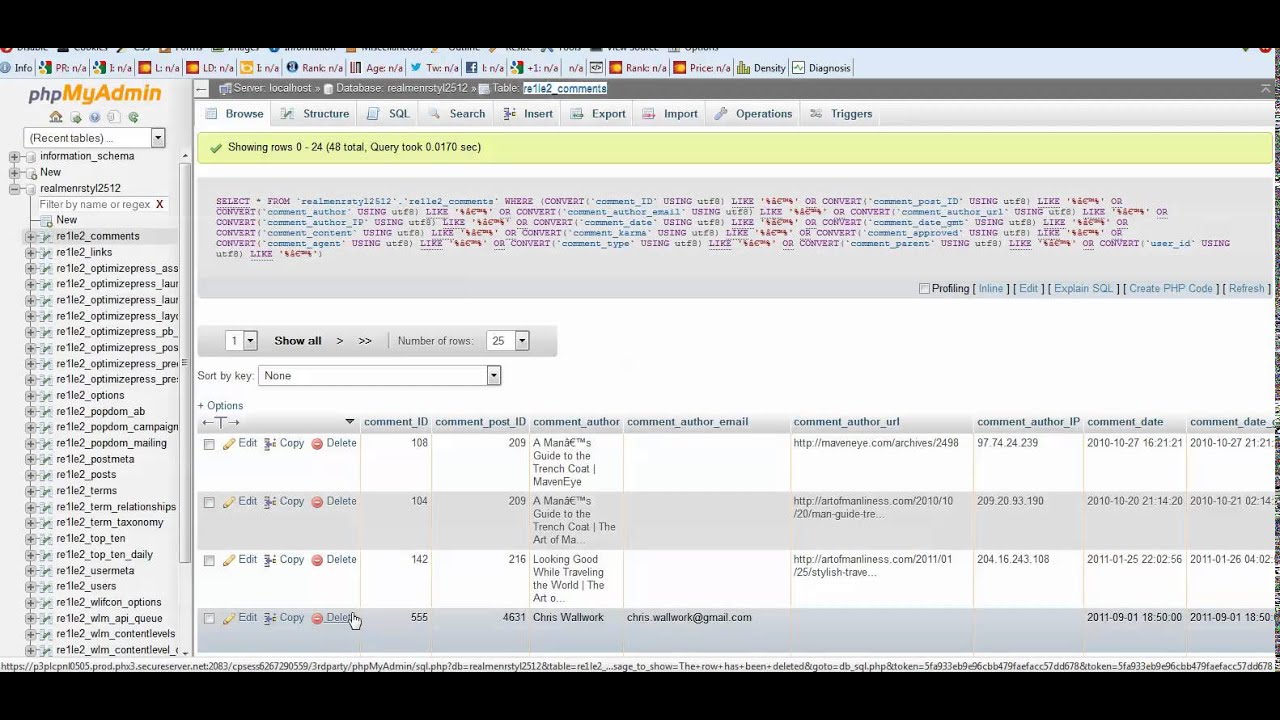

Troubleshooting Search Issues & Character Encoding Problems In SQL Server

Have you ever encountered a situation where the characters on your screen appear distorted, replaced by a series of seemingly random symbols? This issue, often called mojibake, frequently shows up as runs of Latin characters beginning with `Ã` or `Â` (for example, `SÃ£o Paulo` instead of `São Paulo`). It can be a frustrating hurdle, but understanding its root cause and the available solutions is crucial for a smooth user experience.

This problem isn't uncommon. It stems from a mismatch between the character encoding used by the system and the actual data being displayed. Imagine trying to read a book written in a language you don't understand, where the letters are garbled and the meaning is lost. The same principle applies here. Different systems and applications utilize different character encodings, which are essentially ways of mapping characters to numerical values. When these encodings don't align, the result is the gibberish you see on your screen. This often happens when data is stored, transmitted, or displayed using an encoding that differs from what the user's system expects.
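To make the mismatch concrete, here is a small T-SQL sketch. It assumes a SQL Server database whose default collation uses code page 1252 (such as `SQL_Latin1_General_CP1_CI_AS`). It shows the same letter "ã" as bytes under two encodings, and what happens when its UTF-8 bytes are decoded with the wrong one:

```sql
-- Assumes the current database's default collation uses code page 1252
-- (as SQL_Latin1_General_CP1_CI_AS and Latin1_General_CI_AS do).
SELECT
    CAST('ã'  AS VARBINARY(4)) AS cp1252_bytes,        -- 0xE3: one byte in code page 1252
    CAST(N'ã' AS VARBINARY(4)) AS utf16_bytes,         -- 0xE300: UTF-16LE, as NVARCHAR stores it
    CAST(0xC3A3 AS VARCHAR(4)) AS utf8_read_as_cp1252; -- 'Ã£': the UTF-8 bytes of 'ã' decoded as code page 1252
```

The last column is exactly the garbling described above. Two valid UTF-8 bytes are decoded one at a time as two separate code page 1252 characters.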

The issue frequently arises when dealing with data from different sources, databases, or even when transferring information across different operating systems. For instance, if a database is set to use a particular character set and the application retrieving the data is configured differently, the characters may not render correctly. Similarly, if a file containing special characters (such as accents or other diacritics) is opened in a program that doesn't support the encoding used to create the file, these characters will be misrepresented.

One common scenario where this problem surfaces is when working with databases like SQL Server 2017, especially when the collation is set to `SQL_Latin1_General_CP1_CI_AS`. The collation dictates how the database sorts and compares characters. For non-Unicode (`VARCHAR`) columns it also determines the code page, and therefore the character set, that can be stored. For this collation that is Windows code page 1252. Code page 1252 covers the accented letters of Western European languages but nothing beyond them. Distorted characters inevitably appear when data encoded one way (typically UTF-8) is stored or read as code page 1252. This typically happens with characters beyond the basic ASCII range, such as accented letters and special symbols, and Portuguese (with characters like 'ã') is frequently affected. This is exactly what the user in this case experienced. They addressed it by fixing the table's character set for future input data.
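A quick way to check what a collation means for `VARCHAR` storage is `COLLATIONPROPERTY(..., 'CodePage')`. The sketch below shows that both common Latin1 collations use code page 1252. Its last column shows that Portuguese letters survive conversion into that code page, assuming the current database also uses code page 1252:

```sql
SELECT
    COLLATIONPROPERTY('SQL_Latin1_General_CP1_CI_AS', 'CodePage') AS sql_latin1_code_page, -- 1252
    COLLATIONPROPERTY('Latin1_General_CI_AS', 'CodePage')         AS latin1_code_page,     -- 1252
    DATABASEPROPERTYEX(DB_NAME(), 'Collation')                    AS current_db_collation,
    CAST(N'São Paulo' AS VARCHAR(20))                            AS stored_as_varchar;    -- 'ã' exists in code page 1252
```

So when Portuguese text turns into mojibake under this collation, the cause is usually a mismatch elsewhere in the pipeline, not the collation itself.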

Let's look at the specific case of Portuguese, where the tilde (~) plays a crucial role. The nasal vowels, represented by letters like "ã" and "õ", are fundamental to pronunciation and meaning, and rendering them incorrectly can drastically alter a word. Consider the examples lã (wool), irmã (sister), lâmpada (lamp), and São Paulo (Saint Paul). If these characters are misrepresented, the words become unintelligible, and the reader can no longer grasp the intended meaning. The tilde adds a nasal quality to the vowel it marks. These diacritics are not merely decorative; they are integral to the Portuguese language, and their accurate representation is vital.

The same holds for other languages. In French, accents (é, è, ê, etc.) and in Spanish, accented letters (á, é, í, ó, ú, ñ, ü) are vital for correct pronunciation. In German, umlauts (ä, ö, ü) are essential. Failing to display these characters correctly can result in a significant loss of meaning, so the challenge is to ensure they are properly stored and displayed.

To address these character encoding issues, a range of methods can be employed. Often, the solution involves correctly identifying the character encoding of the data and ensuring that the system or application used to display the data is configured to interpret the data using the same encoding. Another approach is to explicitly convert the data to a more universally compatible encoding, such as UTF-8. UTF-8 is a widely used encoding that supports a vast array of characters from different languages, making it an excellent choice for handling diverse data.
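SQL Server 2017 has no UTF-8 collations (they arrived in SQL Server 2019), so there the practical "universal" encoding is Unicode `NVARCHAR`, stored as UTF-16. Below is a minimal sketch, using a hypothetical table variable, of what happens when the same text goes into a code page 1252 `VARCHAR` column and into an `NVARCHAR` column:

```sql
-- Hypothetical table variable; explicit collation so the demo does not depend on the database default.
DECLARE @demo TABLE (
    as_varchar  VARCHAR(20) COLLATE SQL_Latin1_General_CP1_CI_AS,  -- code page 1252
    as_nvarchar NVARCHAR(20)                                       -- Unicode (UTF-16)
);

-- 'Ł' and 'ź' do not exist in code page 1252
INSERT INTO @demo (as_varchar, as_nvarchar)
VALUES (N'Łódź', N'Łódź');

SELECT as_varchar, as_nvarchar FROM @demo;
```

The Polish letters "Ł" and "ź" have no slot in code page 1252. The `VARCHAR` copy therefore receives substitutes (a close Latin letter or `?`), while the `NVARCHAR` copy is exact.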

Since SQL queries are the usual tool for database-related issues, here are a few that can help troubleshoot and fix common character encoding problems. They target situations in SQL Server where characters are rendered incorrectly because of encoding mismatches or collation problems. Treat them as examples: table and column names are placeholders, and other database systems need different syntax.

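Query 1: Identify Incorrect Characters

This query helps identify rows where specific characters are not being displayed correctly. For example, it can find rows containing "Ã", the first character of most mis-decoded accented letters:

```sql
-- Binary collation: under a case-insensitive collation, 'Ã' would also match a correct 'ã'
SELECT *
FROM your_table
WHERE your_column LIKE N'%Ã%' COLLATE Latin1_General_BIN2;
```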

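Query 2: Correcting Character Encoding

This query changes the collation of a column. For `VARCHAR` columns, the collation also sets the code page used to store the data, so it effectively chooses the character set. Note that `Latin1_General_CI_AS` and `SQL_Latin1_General_CP1_CI_AS` both use code page 1252. Switching between them changes sorting and comparison rules, not which characters can be stored. UTF-8 collations (such as `Latin1_General_100_CI_AS_SC_UTF8`) are only available from SQL Server 2019. The following is an example and may not work directly in your case.

```sql
-- Example for SQL Server (adapt syntax as needed for other DBs)
ALTER TABLE your_table
    ALTER COLUMN your_column VARCHAR(255) COLLATE Latin1_General_CI_AS;  -- or the appropriate collation
-- Restate NOT NULL in the ALTER COLUMN if the column was declared NOT NULL
```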

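Query 3: Converting Data to Unicode

`CONVERT` styles control date, number, and binary formatting, not character encoding, so a style argument cannot convert text to UTF-8. In SQL Server 2017, the reliable way to make a column accept a wide range of characters is to change its type to `NVARCHAR`, which stores Unicode as UTF-16. On SQL Server 2019 and later you can instead keep `VARCHAR` and give the column a UTF-8 collation. Changing the type does not repair characters that are already garbled; those need fixing separately, for example with Query 5.

```sql
-- Example for SQL Server: store the column as Unicode (UTF-16)
ALTER TABLE your_table
    ALTER COLUMN your_column NVARCHAR(255);  -- restate NOT NULL if the column had it

-- SQL Server 2019+ only: keep VARCHAR but store UTF-8
-- ALTER TABLE your_table
--     ALTER COLUMN your_column VARCHAR(255) COLLATE Latin1_General_100_CI_AS_SC_UTF8;
```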
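Converting a column to `NVARCHAR` only helps if the values reaching it are Unicode as well. A string literal without the `N` prefix is non-Unicode, so SQL Server converts it to the database's code page before storing it. Below is a small sketch with a hypothetical table variable, assuming the database default collation uses code page 1252:

```sql
-- Hypothetical table variable with a Unicode column.
DECLARE @cities TABLE (id INT PRIMARY KEY, city NVARCHAR(50));

INSERT INTO @cities (id, city) VALUES
    (1, 'Łódź'),   -- no N prefix: a VARCHAR literal, converted to the database code page first
    (2, N'Łódź');  -- N prefix: a Unicode literal, stored exactly

SELECT id, city FROM @cities ORDER BY id;
```

The same applies to application code. Parameters should be sent as Unicode (`NVARCHAR`) rather than as non-Unicode strings.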

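Query 4: Checking and Changing Database Collation

This query checks the default collation of a database and changes it. Changing it usually requires exclusive access to the database. Existing columns keep their current collation; the new default applies to objects created afterwards.

```sql
-- Check the database's default collation
SELECT name, collation_name
FROM sys.databases
WHERE name = 'your_database_name';

-- Change the default collation (on SQL Server 2019+, a UTF-8 collation such as
-- Latin1_General_100_CI_AS_SC_UTF8 would make new VARCHAR columns store UTF-8)
ALTER DATABASE your_database_name COLLATE Latin1_General_CI_AS;
```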
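A database's default collation and each column's collation can differ, so it is worth checking the columns themselves. The sketch below lists the character columns of a table with their type and collation, querying the `sys.columns` and `sys.types` catalog views. `dbo.your_table` is a placeholder, following the convention of the queries above:

```sql
-- List character columns of a table with their type, length, and collation.
SELECT
    c.name           AS column_name,
    t.name           AS type_name,
    c.max_length     AS max_length_bytes,  -- NVARCHAR uses 2 bytes per UTF-16 code unit; -1 means MAX
    c.collation_name
FROM sys.columns AS c
JOIN sys.types   AS t ON t.user_type_id = c.user_type_id
WHERE c.object_id = OBJECT_ID(N'dbo.your_table')
  AND c.collation_name IS NOT NULL;  -- only character columns have a collation
```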

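Query 5: Identifying and Fixing Incorrect Characters (Alternative Approach)

This query is an alternative for fixing specific incorrect characters in a column. It replaces one mojibake sequence with the intended character. The example sequence is "Ã£", which is what the UTF-8 bytes of "ã" look like when read as code page 1252:

```sql
-- Use REPLACE to correct a specific character: replace "Ã£" with "ã"
UPDATE your_table
SET your_column = REPLACE(your_column, N'Ã£', N'ã')
WHERE your_column LIKE N'%Ã£%';
```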
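`REPLACE` handles one sequence at a time, but Portuguese text usually contains several damaged letters. The sketch below chains `REPLACE` calls for a few common pairs. Each pair is the UTF-8 encoding of a letter misread as code page 1252. It runs against a hypothetical table variable standing in for `your_table`, and the pair list is illustrative, not exhaustive:

```sql
-- Hypothetical sample rows standing in for your_table / your_column.
DECLARE @sample TABLE (id INT PRIMARY KEY, city NVARCHAR(100));
INSERT INTO @sample (id, city) VALUES
    (1, N'SÃ£o Paulo'),    -- São Paulo
    (2, N'GoiÃ¢nia'),      -- Goiânia
    (3, N'ConceiÃ§Ã£o'),   -- Conceição
    (4, N'Curitiba');      -- already correct

-- BIN2 makes each match exact, so 'Ã' never matches a correctly stored 'ã'.
UPDATE @sample
SET city =
    REPLACE(REPLACE(REPLACE(REPLACE(REPLACE(REPLACE(
        city COLLATE Latin1_General_BIN2,
        N'Ã£', N'ã'), N'Ãµ', N'õ'), N'Ã§', N'ç'),
        N'Ã©', N'é'), N'Ã¢', N'â'), N'Ã³', N'ó')
WHERE city LIKE N'%Ã%' COLLATE Latin1_General_BIN2;

SELECT id, city FROM @sample ORDER BY id;
```

Run the Query 1 search again afterwards. Any remaining "Ã" points to a pair the list does not cover yet.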

These SQL queries help identify and repair problem characters, but correct display also requires that every system and application involved supports the right character encoding. That includes the configuration of your database, the application reading the data, and any intermediate layers involved in data transmission. Using UTF-8 throughout is generally recommended, because it can represent every Unicode character and is widely supported across systems and languages.

In the realm of web development, the choice of character encoding is equally vital. The `<meta charset>` tag in the HTML `<head>` section tells web browsers how to interpret the characters in your content. Setting the `charset` attribute to `UTF-8`, as in `<meta charset="UTF-8">`, is often the most effective approach. It signals to the browser that your page uses UTF-8, allowing it to render a diverse range of characters correctly.

Here is a scenario where this is useful. You are a website developer for a travel agency based in Paris, France. Your website contains descriptions of various destinations, written in both French and English, and the French descriptions contain numerous accented characters (é, è, à, ç, etc.). Declare UTF-8 in the `<head>` of your HTML pages and make sure the files are actually saved as UTF-8. The browser can then display these characters accurately, and your French content stays readable. Suppose neither the page nor the HTTP `Content-Type` header declares a charset. The browser may then guess a different encoding, and your users would see the distorted characters described earlier.

In database environments, the database collation should be compatible with the character set declared in your application. Consider a scenario where you are managing a database of customer names. The database uses UTF-8 encoding so that a wide range of names is stored correctly. The application connecting to the database must also be configured to communicate using UTF-8, so that special characters are transmitted correctly. An application configured with a different character set will misinterpret the characters. This can lead to data corruption, search errors, and user confusion.

Furthermore, character encoding issues can arise while data is being transferred, particularly when exchanging data between different systems. Imagine moving data from a legacy system that uses a specific character set to a modern system that uses UTF-8. Without proper conversion along the way, the characters can be misinterpreted. The result is corrupted data or incorrect display on the receiving end. Handling the encoding explicitly during transfer is therefore essential to data integrity. File conversion tools (such as `iconv`) can convert data from one encoding to another. Import tools and transfer protocols that let you declare the encoding also help the data arrive intact.
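For imports into SQL Server, the encoding of an incoming file can be declared explicitly. The sketch below uses `BULK INSERT` with `CODEPAGE = '65001'` (UTF-8, which `BULK INSERT` supports from SQL Server 2016); `FORMAT = 'CSV'` requires SQL Server 2017. The target table `dbo.customers` and the file path are hypothetical, and the file is assumed to be comma-separated with Windows (CRLF) line endings and a header row:

```sql
-- Hypothetical target table and file path; adjust both.
BULK INSERT dbo.customers
FROM 'C:\import\customers.csv'
WITH (
    FORMAT          = 'CSV',    -- CSV parsing with quoted fields (SQL Server 2017+)
    FIELDTERMINATOR = ',',      -- comma-separated values
    CODEPAGE        = '65001',  -- read the file as UTF-8 instead of the default code page
    FIRSTROW        = 2         -- skip the header row
);
```

Declaring the code page at the import step avoids the situation where UTF-8 bytes are loaded as code page 1252 and only show up as "Ã" sequences later.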

Let's say you're working on a project that analyzes social media data to detect sentiment, and the analysis involves emojis. Emojis are typically stored and exchanged as UTF-8, so every system that handles the text must support Unicode correctly. Otherwise the emojis will be lost or garbled, and the sentiment analysis will fail. This means that the database, the applications, and all the tools involved in text processing must be properly configured.
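Emojis are a good stress test for a SQL Server setup. The sketch below shows three things for U+1F600 (grinning face): how `NVARCHAR` stores it, and how `LEN` counts it with and without a supplementary-character (`_SC`) collation. The last column shows what happens when it is squeezed into `VARCHAR`, assuming a code page 1252 database default:

```sql
-- U+1F600 lies outside the Basic Multilingual Plane,
-- so NVARCHAR stores it as a UTF-16 surrogate pair.
DECLARE @emoji NVARCHAR(10) = N'😀';

SELECT
    DATALENGTH(@emoji)                               AS bytes,       -- 4
    LEN(@emoji COLLATE Latin1_General_100_CI_AS)    AS len_non_sc,  -- 2: counts UTF-16 code units
    LEN(@emoji COLLATE Latin1_General_100_CI_AS_SC) AS len_sc,      -- 1: counts the character
    CAST(@emoji AS VARCHAR(10))                      AS as_varchar;  -- emoji lost: no code page 1252 equivalent
```

For emoji-heavy text, an `NVARCHAR` column with an `_SC` collation (or a UTF-8 collation on SQL Server 2019+) keeps both storage and string functions consistent.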

In addition to these technical solutions, it's worth noting that the availability of support from online communities and resources, such as Stack Overflow, can be very beneficial. Many users face these character encoding problems, and there is a vast amount of information available online to assist in diagnosing and resolving them. Online forums allow users to share their challenges and provide solutions. There are many articles about encoding problems and solutions. It's an excellent way to learn the fundamentals and find practical help to resolve character encoding issues.

The issue of character encoding might seem like a technical detail, but it has a profound impact on how we interact with digital information. Ensuring that characters are correctly displayed is crucial for effective communication, data integrity, and a smooth user experience. It means setting the right character encoding, using the appropriate configurations, and understanding the underlying mechanisms that govern data storage and display. By understanding these principles, we can better navigate the digital world, ensuring that we can accurately represent and understand the diverse languages and symbols that make up our global online environment.